SPRAC21A, June 2016 – June 2019. OMAP-L132, OMAP-L138, TDA2E, TDA2EG-17, TDA2HF, TDA2HG, TDA2HV, TDA2LF, TDA2P-ABZ, TDA2P-ACD, TDA2SA, TDA2SG, TDA2SX, TDA3LA, TDA3LX, TDA3MA, TDA3MD, TDA3MV


TDA2xx and TDA2ex Performance


6.2 DSP CPU Observations

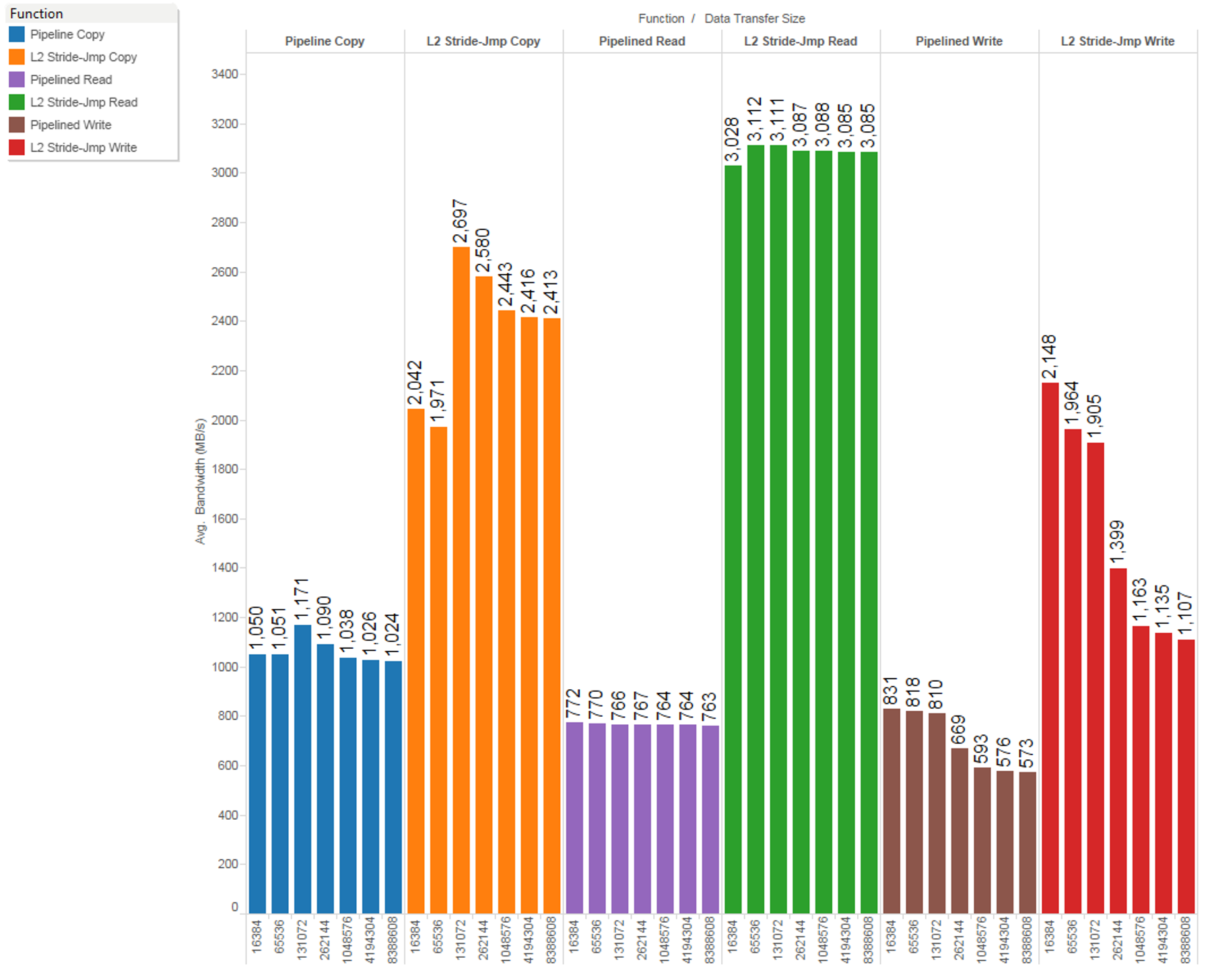

Figure 24 shows the average DSP bandwidth in MBps (y-axis) measured for the functions introduced above, for data sizes of 16 KiB, 64 KiB, 128 KiB, 256 KiB, and 8 MiB (x-axis), with L1D and L2 cache sizes of 32 KiB and 128 KiB, respectively. The bandwidth was measured with prefetch enabled, the MMU off, and the L1D write-back policy enabled. Each 128-byte cache line fetch is actually issued as two 64-byte VBUS commands.
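The relationship between buffer size, cache line fetches, and VBUS commands can be made concrete with a small calculation. The following is a minimal sketch, not code from the report: the function names are hypothetical, the 128-byte line and 64-byte command sizes come from the text, and the bandwidth helper assumes 1 MB = 10^6 bytes and a caller-supplied CPU clock, neither of which is defined in this section.

```c
#include <assert.h>
#include <stdint.h>

#define L2_LINE_BYTES  128u  /* cache line fetch size stated in the text */
#define VBUS_CMD_BYTES  64u  /* size of one VBUS command stated in the text */

/* Number of cache line fetches needed to cover `bytes` (rounded up). */
uint64_t line_fetches(uint64_t bytes)
{
    return (bytes + L2_LINE_BYTES - 1u) / L2_LINE_BYTES;
}

/* Each line fetch is issued as two 64-byte VBUS commands. */
uint64_t vbus_commands(uint64_t bytes)
{
    return line_fetches(bytes) * (L2_LINE_BYTES / VBUS_CMD_BYTES);
}

/* Average bandwidth in MBps from a byte count, an elapsed cycle count,
 * and the CPU clock in Hz. Assumption: 1 MB = 1e6 bytes. */
double bandwidth_mbps(uint64_t bytes, uint64_t cycles, double cpu_hz)
{
    assert(cycles > 0u && cpu_hz > 0.0);
    double seconds = (double)cycles / cpu_hz;
    return ((double)bytes / 1.0e6) / seconds;
}
```

Under these assumptions, a 16 KiB buffer corresponds to 128 line fetches and 256 VBUS commands.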

Figure 24. DSP CPU Read and Write Performance With Different Data Sizes to DDR

A memcpy()-type operation involves both reads and writes, and the L2 cache write-allocates. For buffer sizes that fit entirely within L2, the traffic at the MDMA boundary looks like two streams of reads. For buffer sizes larger than L2, there is a third stream consisting of victim writes, which is why the numbers start to fall off as the data size grows beyond 128 KiB. The reads do not show this trend because the cache lines never become dirty, so the cache does not write lines back even when the data size exceeds the cache size.
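The stream counting in this paragraph can be sketched as a first-order model. This is an illustrative simplification and not a measurement method from the report: the type and function names are hypothetical, the buffer size is compared directly against the L2 size as the text does, and the victim-write figure is a rough upper bound that assumes every dirty destination line is eventually evicted.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    uint64_t src_read_bytes;     /* line fills for the source buffer */
    uint64_t dst_alloc_bytes;    /* line fills caused by write-allocate */
    uint64_t victim_write_bytes; /* dirty lines evicted to memory (upper bound) */
    unsigned streams;            /* concurrent streams at the MDMA boundary */
} mdma_traffic_t;

/* First-order estimate of MDMA traffic for copying `buf_bytes`
 * through a write-allocating L2 cache of `l2_bytes`. */
mdma_traffic_t memcpy_traffic(uint64_t buf_bytes, uint64_t l2_bytes)
{
    assert(l2_bytes > 0u);
    mdma_traffic_t t;
    t.src_read_bytes = buf_bytes;   /* every source line is fetched */
    t.dst_alloc_bytes = buf_bytes;  /* every destination line is fetched before the write */
    /* Victim writes appear only once the buffer no longer fits in L2. */
    t.victim_write_bytes = (buf_bytes > l2_bytes) ? buf_bytes : 0u;
    t.streams = (t.victim_write_bytes != 0u) ? 3u : 2u;
    return t;
}
```

With a 128 KiB L2, this model reports two streams for a 64 KiB copy and three streams for a 256 KiB copy, which matches the point at which the curves in Figure 24 begin to drop.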

The L2 pipeline functions generate more L2 cache line fetches and write-backs in a shorter time span, leading to higher throughput.

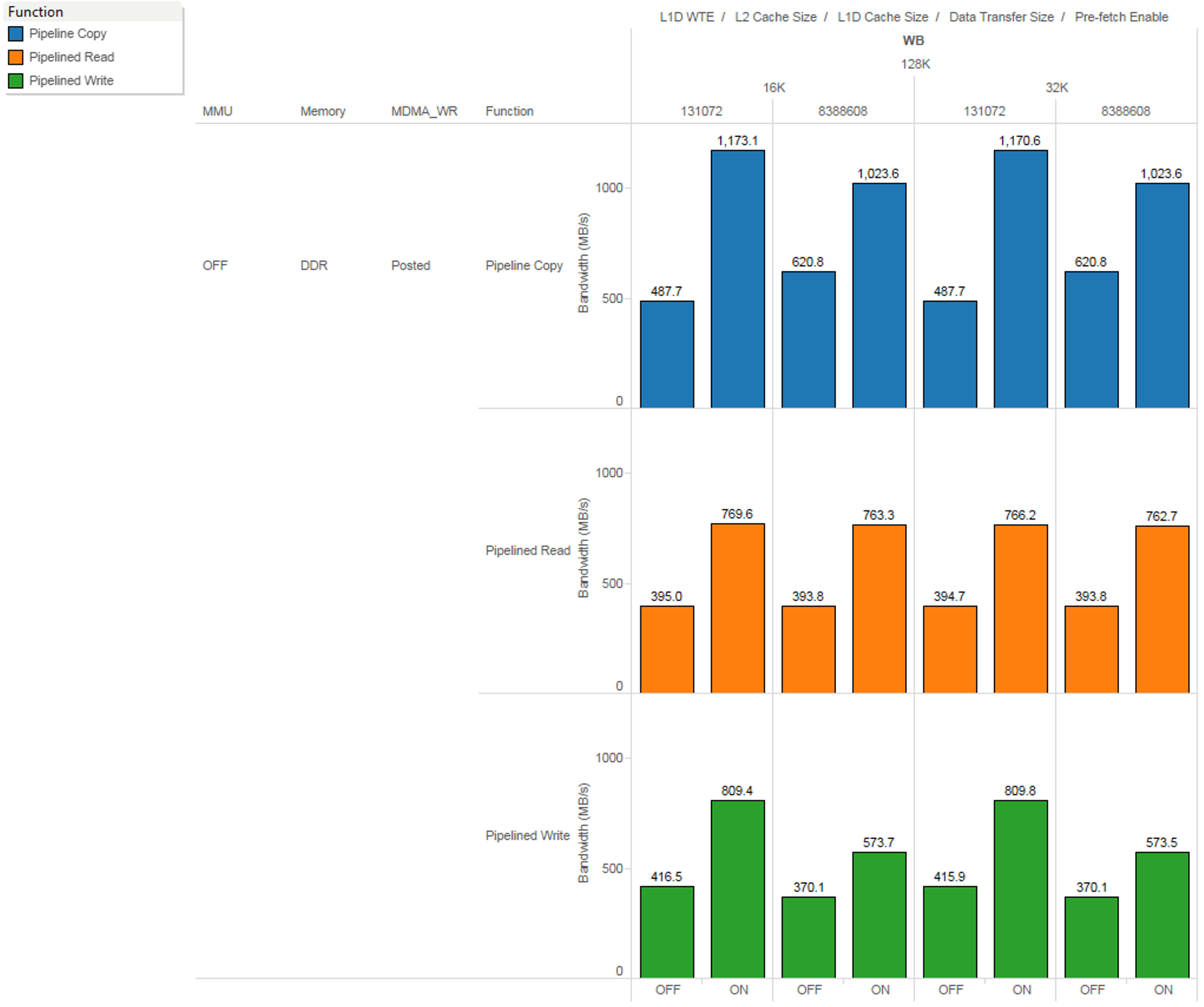

The L2 memory controller conveys to the XMC whether a given address range is prefetchable. This information comes directly from the “PFX” field in the corresponding MAR register. Figure 25 shows the effect of prefetch ON versus OFF for DDR transfers with the MMU off, MDMA posted writes, and the L1D write-back policy enabled. The XMC prefetcher does not distinguish read-allocate from write-allocate; it tries to prefetch for either, as seen by the ~2x performance increase with prefetch ON versus OFF for both read and write streams.
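Toggling the PFX field for an address range can be sketched as follows. This is a hedged illustration rather than the report's or Starterware's code: the function name is hypothetical, and the bit positions (PC at bit 0, PFX at bit 3), the 16 MB coverage per MAR register, and the count of 256 registers are assumptions to verify against the C66x CorePac documentation. The function operates on a caller-supplied register array so that it can be exercised on a host; on the device, that pointer would refer to the memory-mapped MAR registers.

```c
#include <assert.h>
#include <stdint.h>

#define MAR_COUNT 256u        /* assumed number of MAR registers */
#define MAR_PC    (1u << 0)   /* assumed "permit copies" (cacheable) bit */
#define MAR_PFX   (1u << 3)   /* assumed prefetchable bit */
#define MAR_SHIFT 24u         /* assumed 16 MB of address space per MAR */

/* Set or clear PFX for every MAR that overlaps [start, start + size). */
void mar_set_prefetch(volatile uint32_t *mar, uint32_t start,
                      uint32_t size, int enable)
{
    assert(mar != 0);
    if (size == 0u) {
        return;
    }
    uint32_t first = start >> MAR_SHIFT;
    uint32_t last = (uint32_t)(((uint64_t)start + size - 1u) >> MAR_SHIFT);
    if (last >= MAR_COUNT) {
        last = MAR_COUNT - 1u;  /* clamp ranges that run past the 4 GB space */
    }
    for (uint32_t i = first; i <= last; ++i) {
        if (enable) {
            mar[i] |= MAR_PFX;
        } else {
            mar[i] &= ~MAR_PFX;
        }
    }
}
```

Under these assumptions, a 32 MB region starting at 0x80000000 maps to MAR128 and MAR129, so a prefetch ON versus OFF comparison like the one in Figure 25 would change only those two registers.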

Figure 25. Impact of Prefetch Enable versus Disable on CPU Performance

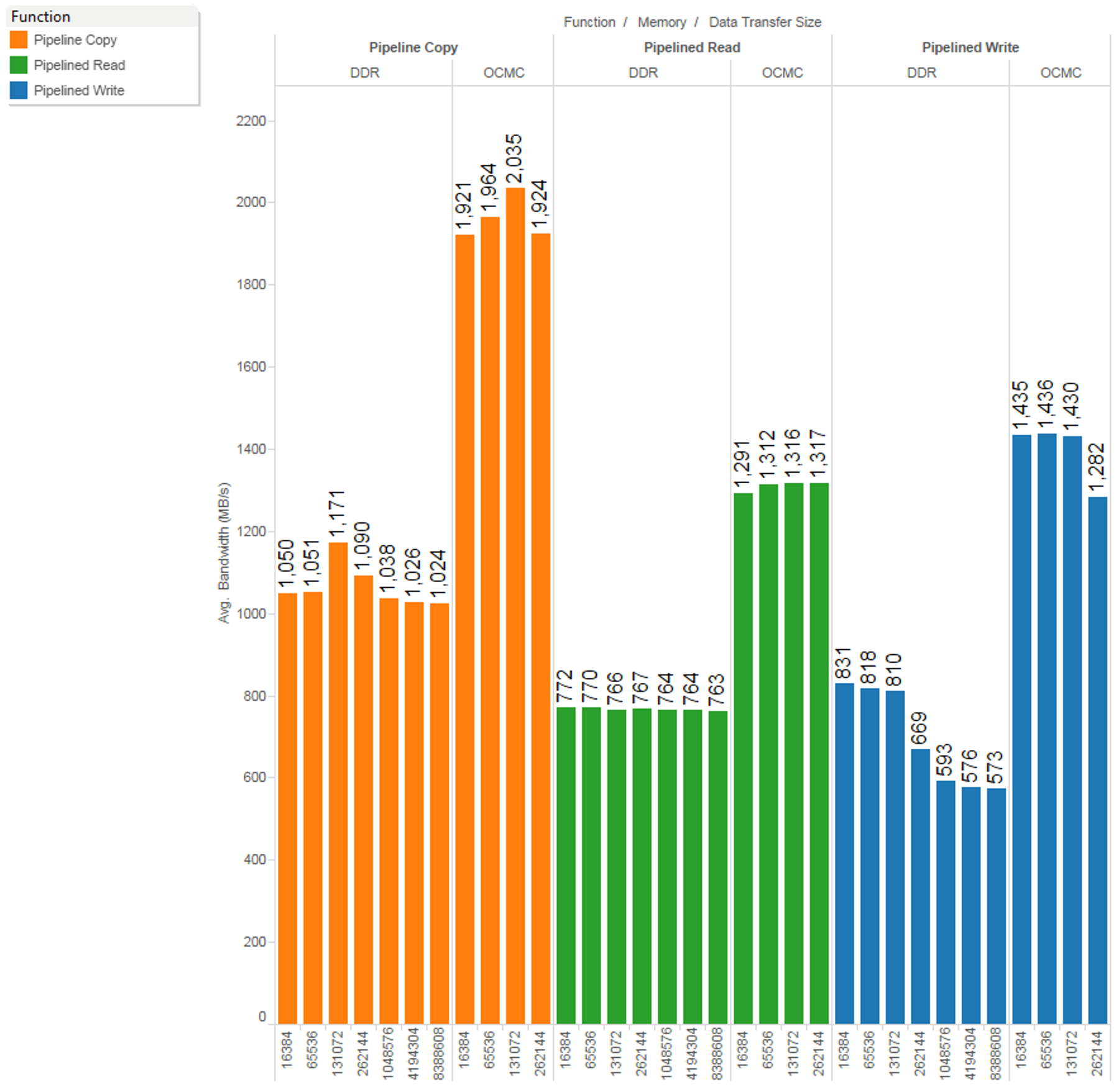

The DSP CPU read and write throughput varies with the source and destination of the buffer. Figure 26 shows the difference in bandwidth obtained when data is transferred from DDR to DDR versus OCMC RAM to OCMC RAM for different transfer sizes, with prefetch enabled, an L2 cache size of 128 KiB, an L1D size of 32 KiB with the L1D write-back policy enabled, the MMU off, and non-posted writes at the MDMA boundary for cached data.

Figure 26. Impact of Source and Destination Memory on DSP CPU RD-WR Performance

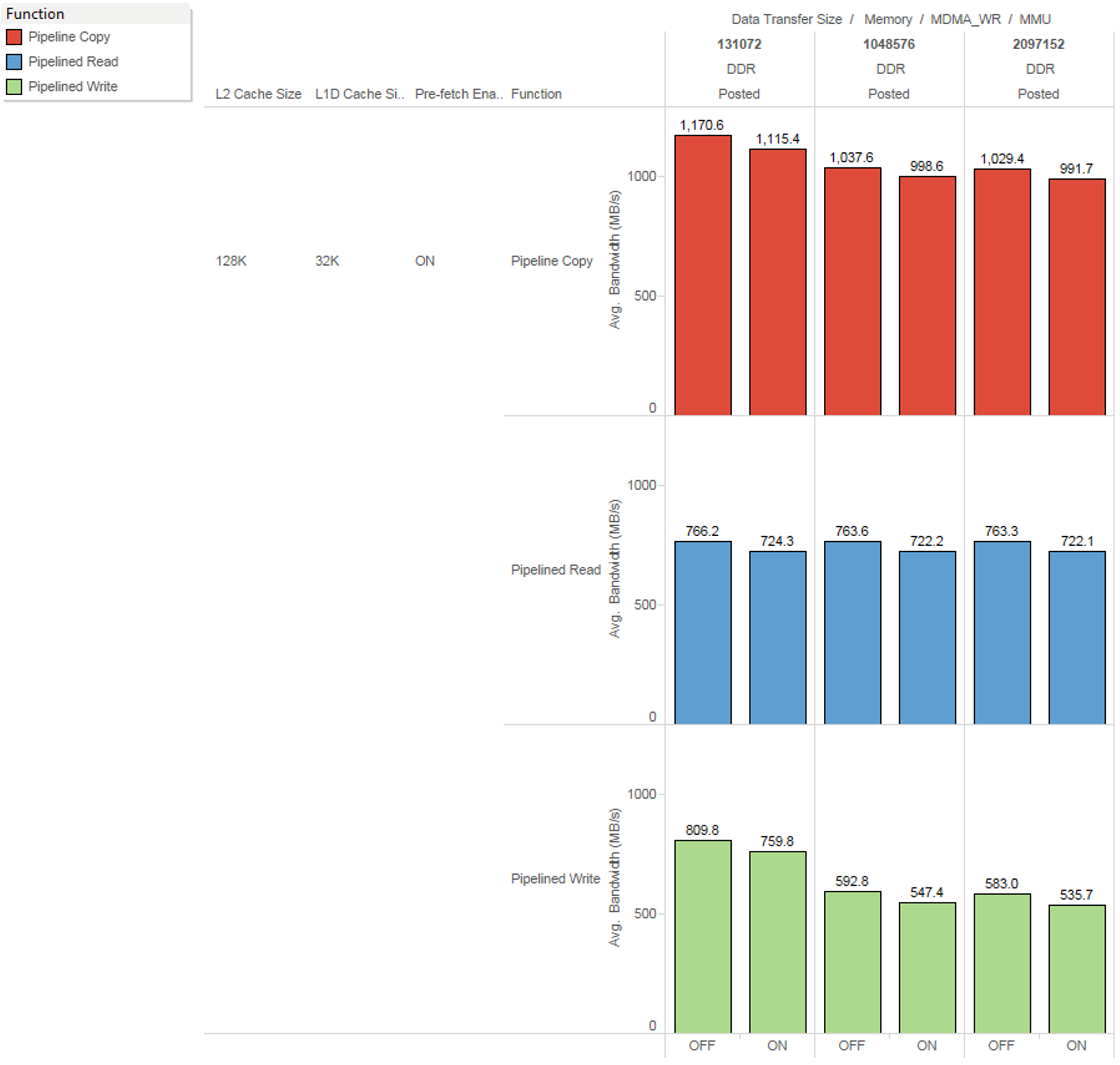

A standalone memory management unit (DSP_MMU0) is included within the DSP1 (DSP1_MMU0) and DSP2 (DSP2_MMU0) subsystem boundaries. The DSP_MMU0 is integrated on the C66x CPU MDMA path to the device L3_MAIN interconnect. This provides several benefits, including protection of the system memories from corruption by accidental DSP1 and DSP2 accesses. Figure 27 shows the effect of MMU off versus MMU on, with prefetch enabled, an L2 cache size of 128 KiB, an L1D size of 32 KiB with the L1D write-back policy enabled, and posted writes at the MDMA boundary for cached data. The MMU adds latency to the path, leading to a slight drop in throughput. The measurements used a 16 MB page size in the TLB.
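The 16 MB page size noted above means that a test buffer touches very few MMU pages. The sketch below counts the pages a buffer spans; it is an illustration only, with hypothetical names and example addresses, and it does not model the TLB itself or the latency the MMU adds on each access.

```c
#include <assert.h>
#include <stdint.h>

#define MMU_PAGE_16MB (16u * 1024u * 1024u)  /* page size noted in the text */

/* Number of MMU pages of `page_bytes` touched by [addr, addr + size). */
uint32_t pages_spanned(uint32_t addr, uint32_t size, uint32_t page_bytes)
{
    assert(page_bytes != 0u);
    if (size == 0u) {
        return 0u;
    }
    uint64_t first = addr / page_bytes;
    uint64_t last = ((uint64_t)addr + size - 1u) / page_bytes;
    return (uint32_t)(last - first + 1u);
}
```

For example, an 8 MiB buffer that starts on a 16 MB boundary lies within a single page, while the same buffer starting 15 MiB into a page spans two.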

Figure 27. Impact of MMU Enable on DSP RD-WR Performance

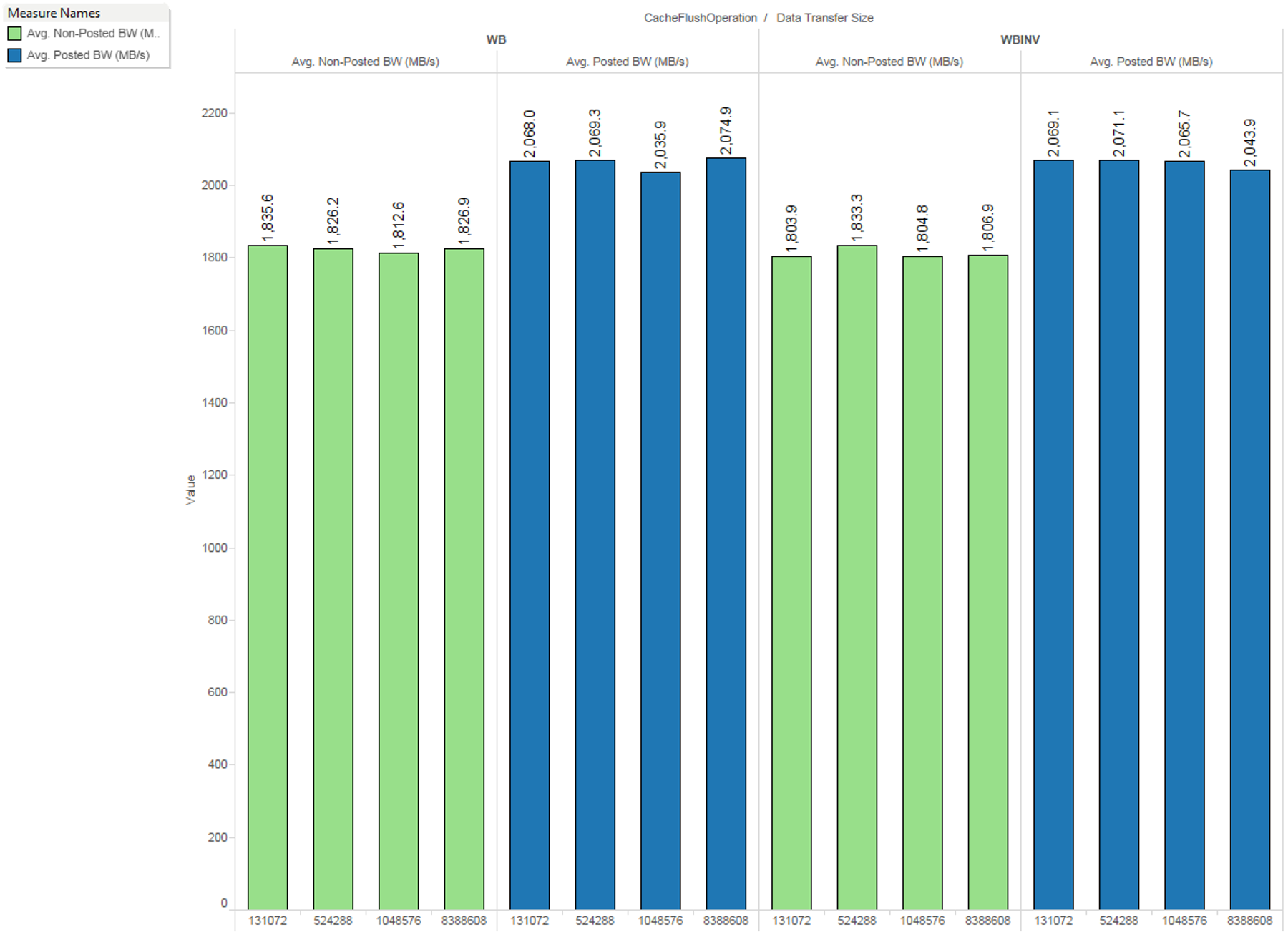

The C66x CorePac submits writes denoted as either cacheable or non-cacheable. Non-cacheable write accesses are submitted as interconnect (L3_MAIN) non-posted writes, whereas cacheable write accesses are submitted as interconnect posted writes, depending on the configuration of C66xOSS_BUS_CONFIG. Figure 28 compares posted and non-posted writes by measuring the bandwidth of the cache flush operation while transferring data to DDR, with prefetch enabled, an L2 cache size of 128 KiB, and an L1D size of 32 KiB with the L1D write-back policy enabled.
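The rule described here can be written as a small decision function. This is a sketch under stated assumptions: the enum, the function name, and the boolean parameter are hypothetical, and the boolean only stands in for whatever setting in C66xOSS_BUS_CONFIG selects posted writes for cacheable accesses. The actual register fields are not given in this section and are not modeled.

```c
#include <stdbool.h>

typedef enum {
    WRITE_NON_POSTED,
    WRITE_POSTED
} l3_write_t;

/* Classify how a write is submitted to L3_MAIN.
 * `cfg_posted_for_cacheable` is a hypothetical stand-in for the
 * C66xOSS_BUS_CONFIG setting described in the text. */
l3_write_t l3_write_type(bool cacheable, bool cfg_posted_for_cacheable)
{
    if (!cacheable) {
        return WRITE_NON_POSTED;  /* non-cacheable writes are non-posted */
    }
    return cfg_posted_for_cacheable ? WRITE_POSTED : WRITE_NON_POSTED;
}
```

The two configurations compared in Figure 28 correspond to calling this function for a cacheable write with the configuration flag set and cleared.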

Figure 28. Impact of Posted and Non-Posted Writes on DSP Cache Flush
