SWRA774 May 2023 IWRL6432

3 Development Process Flow

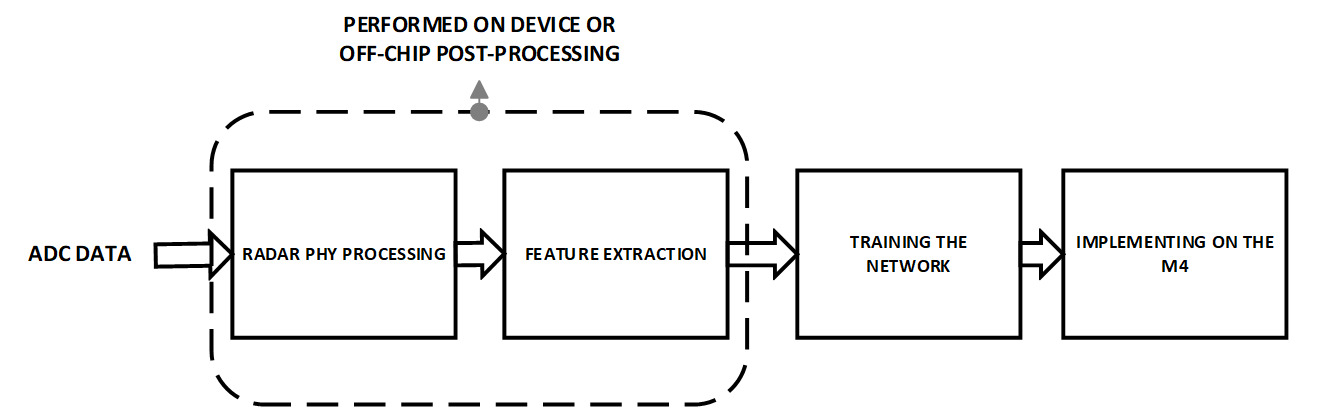

Figure 3-1 Development Flow for an ML-based Application with mmWave Radars

The development flow can be divided into three steps:

- Data collection, feature extraction and annotation

- Architecting and training the classifier

- Implementing the classifier on the IWRL6432 (M4F)

- Data collection, feature extraction, and annotation: Because ML-based classifiers are highly data-driven, collect a sufficient amount of data in scenarios that are representative of the target use case. There are two ways to conduct a data collection campaign:

- The Radar PHY processing and feature extraction are done in real time on the IWRL6432(1), and the feature vectors are streamed to a laptop.

- A data capture board (DCA1000) coupled to the IWRL6432 streams raw ADC data directly to a laptop over Ethernet. The Radar PHY processing and feature extraction are done on the laptop (using, for example, MATLAB® or Python®).

Because features are a compact representation of the raw ADC data, the first method requires less storage on the laptop. The second method, which gives access to the raw ADC data, makes it possible to experiment with different radar preprocessing techniques after the data has been collected.
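To make the storage difference concrete, the following Python sketch estimates per-frame and per-hour storage for both methods. Every configuration value in it (samples per chirp, chirps per frame, number of RX channels, 16-bit samples, a 16-element float32 feature vector, 10 frames per second) is a hypothetical placeholder, not taken from any IWRL6432 configuration; substitute the values of your own chirp profile and feature set.

```python
# Hypothetical configuration: placeholder values only, NOT an IWRL6432 profile.
SAMPLES_PER_CHIRP = 128   # ADC samples per chirp (assumed)
CHIRPS_PER_FRAME = 32     # chirps per frame, all TX combined (assumed)
NUM_RX = 3                # receive channels streamed (assumed)
BYTES_PER_SAMPLE = 2      # 16-bit real ADC samples (assumed)
NUM_FEATURES = 16         # length of the per-frame feature vector (assumed)
BYTES_PER_FEATURE = 4     # float32 features (assumed)
FRAME_RATE_HZ = 10        # frames per second (assumed)


def raw_bytes_per_frame() -> int:
    """Raw ADC data captured per frame (second method, via the DCA1000)."""
    return SAMPLES_PER_CHIRP * CHIRPS_PER_FRAME * NUM_RX * BYTES_PER_SAMPLE


def feature_bytes_per_frame() -> int:
    """Feature vector streamed per frame (first method, on-device extraction)."""
    return NUM_FEATURES * BYTES_PER_FEATURE


def bytes_per_hour(bytes_per_frame: int) -> int:
    """Storage needed for one hour of continuous recording."""
    return bytes_per_frame * FRAME_RATE_HZ * 3600


if __name__ == "__main__":
    raw = raw_bytes_per_frame()
    feat = feature_bytes_per_frame()
    print(f"raw ADC : {raw} B/frame, {bytes_per_hour(raw) / 1e6:.1f} MB/hour")
    print(f"features: {feat} B/frame, {bytes_per_hour(feat) / 1e6:.1f} MB/hour")
    print(f"reduction factor: {raw // feat}x")
```

With these placeholder numbers the raw capture is several hundred times larger than the feature stream; the actual ratio depends entirely on your configuration.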

Accurate annotation (labeling) of the data, that is, associating each feature vector with the correct label, is critical. While not always possible, the goal is to automate this process with minimal human oversight. In some cases, the data collection setup includes a video stream that records the ground truth for labeling.
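When a synchronized ground-truth source such as a video stream is available, much of the labeling can be automated by matching timestamps. The sketch below assumes a hypothetical annotation format of `(start_s, end_s, label)` intervals, marked for example while reviewing the video, and assigns each feature frame the label of the interval that contains its timestamp. Frames outside every interval are left unlabeled (`None`) so they can be reviewed or discarded instead of being silently mislabeled. The function name and interval format are illustrative assumptions.

```python
import bisect
from typing import List, Optional, Sequence, Tuple

# Hypothetical ground-truth annotation: (start_s, end_s, label), half-open
# interval [start_s, end_s) on the same clock as the radar frame timestamps.
Interval = Tuple[float, float, str]


def label_frames(frame_times: Sequence[float],
                 intervals: Sequence[Interval]) -> List[Optional[str]]:
    """Return one label per frame timestamp, or None if no interval covers it.

    Assumes the intervals do not overlap; they are sorted by start time here.
    """
    ordered = sorted(intervals, key=lambda iv: iv[0])
    starts = [iv[0] for iv in ordered]
    labels: List[Optional[str]] = []
    for t in frame_times:
        # Index of the last interval that starts at or before t.
        i = bisect.bisect_right(starts, t) - 1
        if i >= 0 and t < ordered[i][1]:
            labels.append(ordered[i][2])
        else:
            labels.append(None)  # not covered by any annotation
    return labels
```

For example, `label_frames([0.05, 1.2, 2.5], [(0.0, 1.0, "idle"), (1.0, 2.0, "walk")])` labels the first two frames and leaves the third as `None`. Keeping the radar and video clocks synchronized is what makes this approach reliable.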

- Training a classifier: Various training options are available. Keras is an open-source library written in Python that provides a plug-and-play framework to quickly build, train, and evaluate classifiers. TensorFlow® and PyTorch® are two other popular open-source frameworks. For the kind of Edge-AI applications targeted by the IWRL6432, we have found Keras to be a good choice, providing the right tradeoff between ease of use and performance. MATLAB® also offers a licensed Deep Learning Toolbox with an easy-to-use interface. For customers with little or no in-house ML experience, there is a rich ecosystem of third parties that can support the development of ML applications.

Two things must be kept in mind for robust performance of the classifier:

- The data set must incorporate the variations that are expected in the actual use case.

- The classifier must be tested with sufficient isolation between the training and test data sets (for example, in a motion classification use case, the training and test data sets can come from different users).
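One simple way to enforce the isolation described above is to split the data by user rather than by sample, so that no person contributes recordings to both sets. The sketch below assumes each sample is tagged with a user identifier; the function name, the default test fraction, and the seed are illustrative assumptions, not part of any TI tooling.

```python
import random
from typing import List, Sequence, Tuple


def split_by_user(user_ids: Sequence[str], test_fraction: float = 0.25,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split sample indices into (train, test) with no user in both sets.

    user_ids[i] is the (hypothetical) identifier of the person recorded in
    sample i. Whole users, not individual samples, are assigned to a set.
    """
    users = sorted(set(user_ids))
    if len(users) < 2:
        raise ValueError("need recordings from at least two users")
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(users)
    # Keep at least one user in the test set and at least one in training.
    n_test = min(len(users) - 1, max(1, round(len(users) * test_fraction)))
    test_users = set(users[:n_test])
    train_idx = [i for i, u in enumerate(user_ids) if u not in test_users]
    test_idx = [i for i, u in enumerate(user_ids) if u in test_users]
    return train_idx, test_idx
```

If scikit-learn is available, `GroupShuffleSplit` and `GroupKFold` in `sklearn.model_selection` implement the same idea when the user IDs are passed as the `groups` argument.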

- Implementing on the Arm® Cortex®-M4F: Once the ML network (architecture, weights, and biases) has been chosen in step 2, the final step is to implement the network in the embedded system. Some of the available options are:

- Hand-written bare-metal C code (with weights and biases in floating point)

- CMSIS-NN is a software library with neural network kernels optimized for Arm® Cortex®-M4F processor cores. The library supports only quantized weights and biases (8-bit or 16-bit). The library also provides a recipe to quantize the weights and biases of a floating-point network.

- TFLite, an open-source library developed by Google for deploying machine learning models to embedded devices. It takes a floating-point network as input and generates a quantized version of the network that can run on an embedded device.

- Third parties also provide support for porting classifiers to an embedded environment.
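Both CMSIS-NN and TFLite rely on quantizing a floating-point network. As a rough illustration of what that involves, the Python sketch below applies symmetric, per-tensor 8-bit fixed-point (Qm.n) quantization with a power-of-two scale, then evaluates one neuron's dot product with integer arithmetic only. This is a generic technique chosen for illustration; it is not the CMSIS-NN quantization recipe or the TFLite converter (TFLite, for example, represents quantized tensors with a real-valued scale and a zero point rather than a power-of-two shift), and all function names and example values are hypothetical.

```python
import math
from typing import List, Sequence


def choose_frac_bits(values: Sequence[float], total_bits: int = 8) -> int:
    """Fractional bits n of a signed Qm.n format so max |value| fits."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return total_bits - 1
    # Smallest integer-bit count (excluding sign) with max_abs < 2**int_bits.
    int_bits = math.floor(math.log2(max_abs)) + 1
    return total_bits - 1 - int_bits


def quantize(values: Sequence[float], frac_bits: int,
             total_bits: int = 8) -> List[int]:
    """Round to the nearest multiple of 2**-frac_bits and saturate."""
    scale = 2.0 ** frac_bits
    lo, hi = -(1 << (total_bits - 1)), (1 << (total_bits - 1)) - 1
    return [min(hi, max(lo, round(v * scale))) for v in values]


def dequantize(q: Sequence[int], frac_bits: int) -> List[float]:
    """Map integers back to real values (useful for checking the error)."""
    return [x / 2.0 ** frac_bits for x in q]


def fixed_point_dot(qw: Sequence[int], qx: Sequence[int]) -> int:
    """Integer multiply-accumulate; the result has fw + fx fractional bits.

    Python ints never overflow; on the M4F this would be a 32-bit
    accumulator, so the layer size and formats must keep the sum in range.
    """
    return sum(w * x for w, x in zip(qw, qx))


if __name__ == "__main__":
    w = [0.42, -0.17, 0.05, -0.91, 0.33]  # hypothetical float weights
    x = [0.5, 1.0, -0.25, 0.1, 0.75]      # hypothetical input features
    fw, fx = choose_frac_bits(w), choose_frac_bits(x)
    acc = fixed_point_dot(quantize(w, fw), quantize(x, fx))
    print("float dot :", sum(a * b for a, b in zip(w, x)))
    print("fixed dot :", acc / 2 ** (fw + fx))
```

Comparing the two printed values gives a quick sense of the accuracy cost of 8-bit weights; in practice, validate the quantized network on the held-out test set rather than on individual dot products.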